Having discussed the subject areas related to Bavardica, we can now go through the steps involved in actually building the project.

1. Sending a public message over the TCP network from a Silverlight client

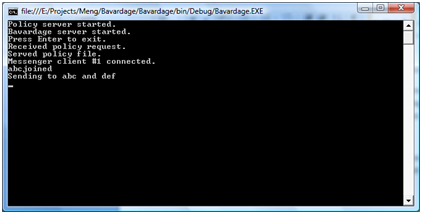

The development model selected for this project is prototyping. I started with a throwaway prototype that sent simple TCP packets from a Silverlight client to a console application acting as the server. It was a very basic chat that let a user, say A, send a message to a user B so that no one apart from A and B would receive it. The server was a .NET console application and the client was a Silverlight application hosted in an ASP.NET page.
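To make the routing rule concrete, here is a minimal Python sketch (not the project's .NET code) of how a relay server can deliver a message from A to B only. The names `ChatRelay`, `connect` and `send_private` are hypothetical, and in-memory inboxes stand in for the TCP connections so that the logic can be run on its own.

```python
from collections import defaultdict


class ChatRelay:
    """Hypothetical relay: in-memory inboxes stand in for the clients' TCP sockets."""

    def __init__(self):
        self.inboxes = defaultdict(list)  # username -> messages delivered to that user
        self.online = set()

    def connect(self, user):
        self.online.add(user)

    def send_private(self, sender, recipient, text):
        # Only the sender and the recipient ever see the message.
        if recipient not in self.online:
            raise KeyError(f"{recipient} is not connected")
        message = (sender, text)
        self.inboxes[recipient].append(message)
        self.inboxes[sender].append(message)  # echo back to the sender's own window


relay = ChatRelay()
for name in ("A", "B", "C"):
    relay.connect(name)
relay.send_private("A", "B", "hello")
print(relay.inboxes["B"], relay.inboxes["C"])
```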

2. First contacts with Silverlight animation

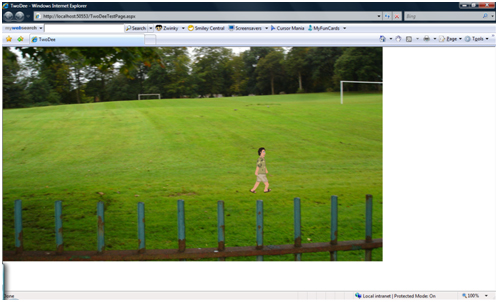

The second program I wrote was a simple Silverlight application in which an animated 2D character walked around a park that served as the background of the web page. The sole objective of this experiment was to study the motion of a character over a 2D scene.

The first key point of this prototype is the way the avatar is animated when a direction key is pressed.

The second key point is that the model used sprite-sheet (frame-by-frame) animation: a strip of adjacent frames with a transparent background is slid horizontally, one frame at a time, whenever a direction key is pressed.
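The sprite-sheet mechanism comes down to simple arithmetic. The visible window stays fixed while the strip of frames is shifted left by one frame width per key press, wrapping back to the first frame at the end. The following Python sketch shows this, with made-up values for the frame width and frame count.

```python
FRAME_WIDTH = 64   # hypothetical width of one frame, in pixels
FRAME_COUNT = 8    # hypothetical number of frames in the walking strip


def next_frame(index):
    """Advance to the next frame, wrapping around at the end of the strip."""
    return (index + 1) % FRAME_COUNT


def strip_offset(index):
    """Horizontal offset applied to the strip so that frame `index` shows through the window."""
    return -index * FRAME_WIDTH


frame = 0
offsets = []
for _ in range(10):  # ten direction key presses
    frame = next_frame(frame)
    offsets.append(strip_offset(frame))
print(offsets)
```

The jump from one offset to the next is abrupt because nothing is drawn between two frames. This is the lack of interpolation mentioned below.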

This approach used KeyAnimationUsingKeyFrames. It was dropped later in the project because it involved no interpolation and because drawing each character in every single position would have taken a lot of time.

3. Making the server run silently in the background (as a web service)

The plan was to integrate the two prototypes so that users could both chat and move around the 2D scene. A second aim was to host the server application silently in a web service, so that no console application had to be kept open while the system was running. Both goals were reached in the next prototype.

I decided to build a more robust server application to serve as the basis of an evolutionary prototype that would grow gradually with the project. That server had two main requirements:

- It had to do away with the console application and run as a silent background process. For this purpose, I used web services.

- The server had to push data to the clients, that is, send data to them even when they have not requested it. In the classic HTTP model, the client first makes a request and only then can the server respond. The solution I adopted was WCF (Windows Communication Foundation) duplex web services, which provide an asynchronous, two-way communication model.

It is worth observing how WCF duplex web services work.
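In a WCF duplex service, the service contract declares a callback contract, and the server keeps the client's callback channel so that it can call back into the client whenever it needs to. The following Python sketch is an analogy only, not the WCF API. Plain callables play the role of callback channels, and the names `DuplexServer`, `subscribe`, `unsubscribe` and `publish` are hypothetical.

```python
from typing import Callable, Dict


class DuplexServer:
    """Hypothetical stand-in for a duplex service: one callback kept per connected client."""

    def __init__(self):
        self.callbacks: Dict[str, Callable[[str], None]] = {}

    def subscribe(self, client_id: str, callback: Callable[[str], None]) -> None:
        # Analogous to the server storing a client's callback channel.
        self.callbacks[client_id] = callback

    def unsubscribe(self, client_id: str) -> None:
        self.callbacks.pop(client_id, None)

    def publish(self, message: str) -> None:
        # Server-initiated push: every subscribed client is notified without asking.
        for callback in list(self.callbacks.values()):
            callback(message)


received = {"A": [], "B": []}
server = DuplexServer()
server.subscribe("A", received["A"].append)
server.subscribe("B", received["B"].append)
server.publish("C is now online")
server.unsubscribe("B")
server.publish("second update")
print(received)
```

The point is the direction of the calls. After the initial subscription, the clients never ask for anything, yet they receive updates.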

While the public chat happens in a common window, one-to-one chat requires an individual window for each conversation. In Bavardica, multiple conversations are organised as tabs, using the Silverlight tab control.

The Room tab is used for public conversations. To start a private conversation, a user just needs to click on the name of the person they want to talk to (a list of the available usernames is displayed in the public room).
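The tab logic can be modelled as a dictionary from conversation partner to tab. Clicking a name opens a tab only if none exists yet. Private messages go to the sender's tab, and public ones go to the Room tab. The following Python sketch uses hypothetical names (`ConversationTabs`, `open_private`, `deliver`) and plain lists in place of Silverlight tab items. The choice not to switch focus on an incoming private message is also an assumption.

```python
class ConversationTabs:
    """Hypothetical model of the tab strip: one 'Room' tab plus one tab per partner."""

    ROOM = "Room"

    def __init__(self):
        self.tabs = {self.ROOM: []}  # tab title -> messages shown in that tab
        self.selected = self.ROOM

    def open_private(self, partner):
        # Clicking a username reuses the existing tab if there is one.
        self.tabs.setdefault(partner, [])
        self.selected = partner

    def deliver(self, sender, text, private=False):
        if private:
            # Assumed behaviour: open the sender's tab if needed, without switching to it.
            self.tabs.setdefault(sender, []).append(text)
        else:
            self.tabs[self.ROOM].append(f"{sender}: {text}")


tabs = ConversationTabs()
tabs.deliver("B", "hi all")
tabs.open_private("B")
tabs.open_private("B")  # a second click does not create a duplicate tab
tabs.deliver("C", "psst", private=True)
print(list(tabs.tabs), tabs.selected)
```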

5. Drawing and animating characters

The next step was the design and animation of 2D avatars. There was already one avatar in the previous prototypes, but it is no fun if everyone looks the same in a virtual world. Moreover, that particular avatar had been borrowed from another Silverlight developer (Darren Mart). The previous model also had several disadvantages. Sprite-sheet animation provides no interpolation between frames, and the model involves a lot of drawing, since every individual position has to be drawn. The biggest issue in our context, however, is that the model allows no customisation. One of the first non-negotiable goals of this project was the ability to mix and match clothes and other outfit pieces, as well as their colours. This is fairly simple in a 3D world, where meshes and textures can just be swapped. In a 2D world, changing one part of the outfit is tricky unless we draw a sheet for every conceivable combination of pieces. The solution implemented in Bavardica was instead to create a set of baseline images that collectively form a character. As a result, I ended up drawing my own characters.
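The arithmetic behind this choice is easy to check. With layered images, the amount of artwork grows with the sum of the options per layer. Pre-drawing every full outfit grows with their product. The layer names and option counts below are purely illustrative and do not reflect Bavardica's actual inventory.

```python
from math import prod

# Hypothetical number of options available for each layer of a character.
options = {"body": 3, "hair": 2, "top": 2, "bottom": 2, "boots": 2}

layered_images = sum(options.values())  # each piece is drawn once
combinations = prod(options.values())   # every complete outfit drawn separately

print(layered_images, combinations)
```

Each new option adds a single image to the layered approach but multiplies the number of complete combinations. Every pose and angle then multiplies both figures again.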

So even though this is mainly a programming project rather than a graphics project, it is worth mentioning that quite a lot of time and effort went into drawing and animating original characters. Customisation is also an important feature of a virtual world: users should be able to choose how they look and how they are dressed. For this project, I chose to draw four basic characters (two males and two females), with at least two costumes and two hairstyles for each character. Due to time constraints, I could not draw and animate more than that. The process involves fully drawing and painting the bodies, then drawing the clothing over them. Three basic skin colours are available, and users can change the colours of their clothes and hair. Here is a preview of one of the characters:

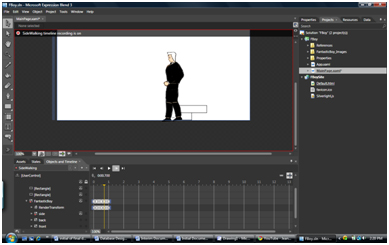

The characters are animated to walk, sit and stand. The difficulty is that each animation had to be produced from three angles (front, back and side). There is also an idle animation that makes the characters look alive even when they are standing still. These models use DoubleAnimationUsingKeyFrames, as opposed to the previous one, which used KeyAnimationUsingKeyFrames. Microsoft Expression Blend was used to produce the animations.
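Every action exists in three angles, so one natural way to organise the resulting storyboards is a lookup keyed by action and angle. The following Python sketch illustrates this idea. The naming scheme (`"walk_side"` and so on) and the mapping from facing direction to drawing angle are assumptions, not taken from the project.

```python
ACTIONS = ("walk", "sit", "stand")
ANGLES = ("front", "back", "side")

# Hypothetical storyboard names: one per (action, angle) pair, plus the idle loop.
STORYBOARDS = {(a, g): f"{a}_{g}" for a in ACTIONS for g in ANGLES}
STORYBOARDS[("idle", None)] = "idle"

# Assumed mapping from the direction the character faces to the angle it is drawn from.
ANGLE_FOR_DIRECTION = {"down": "front", "up": "back", "left": "side", "right": "side"}


def storyboard_for(action, direction=None):
    """Pick the storyboard to play for an action seen from the current direction."""
    if action == "idle":
        return STORYBOARDS[("idle", None)]
    return STORYBOARDS[(action, ANGLE_FOR_DIRECTION[direction])]


print(len(STORYBOARDS), storyboard_for("walk", "left"), storyboard_for("sit", "up"))
```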

The animation system used has two main advantages:

- Silverlight’s interpolation between key frames makes the motion silky smooth.

- The characters are customisable: users can swap boots or hairstyle (or anything else) as they wish.
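The difference between holding a value and interpolating it can be shown numerically. Silverlight offers, among others, discrete and linear double key frames. The following Python sketch evaluates a property between key frames in both ways. The property could be, for example, a limb's rotation angle. The key-frame times and values are invented for the illustration.

```python
from bisect import bisect_right

# Hypothetical key frames: (time in seconds, rotation angle in degrees).
KEYFRAMES = [(0.0, 0.0), (0.5, 30.0), (1.0, 0.0)]


def discrete_value(t):
    """Hold the value of the last key frame reached (no interpolation)."""
    times = [time for time, _ in KEYFRAMES]
    i = max(bisect_right(times, t) - 1, 0)
    return KEYFRAMES[i][1]


def linear_value(t):
    """Interpolate linearly between the two key frames surrounding t."""
    if t <= KEYFRAMES[0][0]:
        return KEYFRAMES[0][1]
    for (t0, v0), (t1, v1) in zip(KEYFRAMES, KEYFRAMES[1:]):
        if t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return KEYFRAMES[-1][1]


for t in (0.0, 0.25, 0.5, 0.75):
    print(t, discrete_value(t), linear_value(t))
```

At t = 0.25 the discrete version is still stuck at the first pose, while the linear version is already halfway to the second. That in-between motion is what makes the result look smooth.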

Unfortunately, handling characters in a virtual world is not only about design. It involves a lot of coding. Let’s start with the creation of a character.

A few array lists are maintained to offer the user a variety of outfits, as well as shapes and genders.

Depending on the user’s choices, the relevant parts are shown and the others are hidden. Colour changes, however, follow a different logic.
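Here is a minimal Python sketch of the show/hide step. It assumes that each selectable piece is a separate image layer with a visibility flag, and a boolean stands in for the Visibility property of a Silverlight image. The option lists and layer names are hypothetical.

```python
# Hypothetical option lists, in the spirit of the array lists described above.
GENDERS = ["male", "female"]
HAIRSTYLES = {"male": ["short", "spiky"], "female": ["long", "bun"]}
COSTUMES = {"male": ["suit", "casual"], "female": ["dress", "casual"]}


def visible_layers(gender, hairstyle, costume):
    """Return a visibility map: only the chosen pieces are shown, all others hidden."""
    if gender not in GENDERS:
        raise ValueError(f"unknown gender: {gender}")
    layers = {}
    for g in GENDERS:
        for h in HAIRSTYLES[g]:
            layers[f"{g}/hair/{h}"] = (g == gender and h == hairstyle)
        for c in COSTUMES[g]:
            layers[f"{g}/costume/{c}"] = (g == gender and c == costume)
    return layers


layers = visible_layers("female", "bun", "casual")
shown = [name for name, visible in layers.items() if visible]
print(shown)
```

All layers exist from the start and only their visibility changes. The same function could therefore also serve to set up other users' characters.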

Here, the source file for each body part is fetched from a directory that changes according to the user’s choice. Later in the application, when users can change the colours of all the parts of their outfits, the same logic applies.
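The following Python sketch illustrates this colour logic. Each body part's image path is built from a per-colour directory, so recolouring a piece only changes which file its image points to. The folder layout (`Images/<part>/<colour>/<piece>.png`) and the `part_source` helper are assumptions made for the example.

```python
from pathlib import PurePosixPath

IMAGE_ROOT = PurePosixPath("Images")  # hypothetical root folder of the artwork


def part_source(part, piece, colour):
    """Build the image path for one part: the chosen colour selects the directory."""
    return str(IMAGE_ROOT / part / colour / f"{piece}.png")


outfit = {"hair": ("bun", "brown"), "top": ("casual", "red")}
outfit["top"] = ("casual", "blue")  # the user recolours the top: only the folder changes
sources = {part: part_source(part, piece, colour) for part, (piece, colour) in outfit.items()}
print(sources)
```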

That covers character creation. Once a character is created, details of its appearance are stored in the database, as described in the database design. From then on, every time the user comes online, the server can send all these details to all the other clients. As soon as a client becomes aware that another user is online, it has to render the corresponding character in its scene according to the data received.
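The round trip can be pictured as three steps. The server serialises the stored appearance and sends it to every other client, and each client rebuilds the character from it. The following Python sketch uses JSON and a made-up record layout (`CharacterDetails` and its fields are hypothetical). Bavardica's actual schema and wire format may differ.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CharacterDetails:
    """Hypothetical appearance record, loosely mirroring what is stored per user."""
    username: str
    gender: str
    skin: str
    hairstyle: str
    hair_colour: str
    costume: str
    costume_colour: str


def broadcast(details, online_users):
    """Server side: encode the details once and address them to every other client."""
    payload = json.dumps(asdict(details))
    return {user: payload for user in online_users if user != details.username}


def on_character_received(payload):
    """Client side: rebuild the record before showing and hiding the matching images."""
    return CharacterDetails(**json.loads(payload))


alice = CharacterDetails("alice", "female", "medium", "bun", "brown", "casual", "blue")
outbox = broadcast(alice, ["alice", "bob", "carol"])
print(sorted(outbox), on_character_received(outbox["bob"]) == alice)
```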

Setting the other characters’ details works as in character creation: it is mainly a matter of hiding and showing images.

Now that we have seen how the code for character appearance works, we turn to the code for animation and motion. It starts with a key press by the user, which Silverlight handles. In our example, we will consider the right arrow key.
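The right-key case can be sketched as follows. The key press sets the facing direction, starts the side walking animation if it is not already playing, and moves the character by a fixed step, clamped to the edge of the scene. Releasing the key returns the character to its idle animation. This is Python rather than a Silverlight key handler. The step size, scene and avatar widths, and animation names are all hypothetical.

```python
from dataclasses import dataclass

STEP = 5           # hypothetical pixels moved per key event
SCENE_WIDTH = 800  # hypothetical width of the 2D scene
AVATAR_WIDTH = 64  # hypothetical width of the avatar


@dataclass
class Avatar:
    x: int = 0
    facing: str = "down"
    animation: str = "idle"


def on_key_down(avatar, key):
    """Handle the right arrow key: face right, walk, and move within the scene."""
    if key != "Right":
        return
    avatar.facing = "right"
    if avatar.animation != "walk_side":
        avatar.animation = "walk_side"  # start the walk cycle only once
    avatar.x = min(avatar.x + STEP, SCENE_WIDTH - AVATAR_WIDTH)


def on_key_up(avatar, key):
    """Releasing the key returns the avatar to its idle animation."""
    if key == "Right":
        avatar.animation = "idle"


me = Avatar(x=730)
for _ in range(3):
    on_key_down(me, "Right")
print(me)
on_key_up(me, "Right")
```

Key-repeat events keep arriving while the key is held down. The check on the current animation avoids restarting the walk cycle on every one of them.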

6. The Cybavard

After character design and animation, the focus moved to the Cybavard, the artificial intelligence part of the project. The logic behind the Cybavard is inspired by the A.L.I.C.E bot introduced earlier in this presentation. What is interesting about A.L.I.C.E is that it is based on an XML dialect called AIML (Artificial Intelligence Markup Language). Because XML is universal, AIML can be used with a wide variety of languages, so it could also suit the Cybavards in this .NET project; all that was needed was a C# AIML interpreter. For this project, I used a .NET library, AIMLBot.dll (by Nicholas Toll), released under the GNU General Public License. It offers several advantages:

- Very small size: about 52 KB
- Very fast: can process 30,000 categories in under one second
- Means of saving the bot’s brain as a binary file
- A simple and logical API

The inner workings of this program are also fairly simple. It is based on pattern matching: for each sentence entered, it searches its knowledge base for the stimulus-response pair that fits best.
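The following Python sketch gives a deliberately simplified idea of what "fits best" means. Patterns are stored word by word in a tree, `*` matches one or more words, and an exact word is always tried before the wildcard. It is not AIMLBot's implementation. Real AIML has further rules, such as the `_` wildcard and `<that>`/`<topic>` context. The categories and helper names here are invented.

```python
import re

# Hypothetical knowledge base: pattern -> template ("<star/>" marks the captured words).
CATEGORIES = {
    "HELLO": "Hi there!",
    "HELLO *": "Hi! You said: <star/>",
    "MY NAME IS *": "Nice to meet you, <star/>.",
    "*": "Tell me more.",
}


def tokenize(sentence):
    """Split the input into words, dropping punctuation."""
    return re.findall(r"[A-Za-z0-9']+", sentence)


def build_graph(categories):
    """Store patterns in a word tree (a toy version of an AIML matching graph)."""
    root = {}
    for pattern, template in categories.items():
        node = root
        for word in pattern.split():
            node = node.setdefault(word, {})
        node["<template>"] = template
    return root


def match(node, words):
    """Depth-first search: try the exact word before the * wildcard."""
    if not words:
        return (node["<template>"], []) if "<template>" in node else None
    head, rest = words[0].upper(), words[1:]
    if head in node:
        found = match(node[head], rest)
        if found:
            return found
    if "*" in node:
        # * consumes one or more words; try the shortest span first.
        for i in range(1, len(words) + 1):
            found = match(node["*"], words[i:])
            if found:
                template, stars = found
                return template, [" ".join(words[:i])] + stars
    return None


def respond(graph, sentence):
    """Return the best-matching template with <star/> filled in, or None."""
    found = match(graph, tokenize(sentence))
    if not found:
        return None
    template, stars = found
    return template.replace("<star/>", stars[0] if stars else "")


graph = build_graph(CATEGORIES)
print(respond(graph, "My name is Ada"))
```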

In practice, I add a reference to the AIMLBot.dll binary from my web service and upload the knowledge base to the server. The knowledge base is mainly a set of AIML files that I can edit to suit my requirements, and I can also add my own AIML files. In the Cybavard class, all I had to do was create a bot object and load the AIML files.
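Loading a knowledge base essentially means reading the `<category>` elements of AIML files. The following Python sketch illustrates this step with the standard `xml.etree.ElementTree` module, not AIMLBot's loader. It extracts pattern/template pairs from an AIML document held in a string. A real bot would read every AIML file in its directory and would also handle tags nested inside templates. The sample categories are invented.

```python
import xml.etree.ElementTree as ET

# A tiny AIML document; a real knowledge base is spread over many .aiml files.
AIML_SOURCE = """<aiml version="1.0">
  <category>
    <pattern>HELLO</pattern>
    <template>Hi there!</template>
  </category>
  <category>
    <pattern>WHO ARE YOU</pattern>
    <template>I am a Cybavard.</template>
  </category>
</aiml>"""


def load_categories(aiml_text):
    """Return {pattern: template} for every category in an AIML document."""
    root = ET.fromstring(aiml_text)
    categories = {}
    for category in root.iter("category"):
        # Normalise the pattern: upper case, single spaces between words.
        pattern = " ".join(category.findtext("pattern", "").upper().split())
        template = category.findtext("template", "").strip()
        categories[pattern] = template
    return categories


print(load_categories(AIML_SOURCE))
```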

In the following part, we will go through the design of the characters.